As well as a copy of his daughter,

a news anchor:

And more recently just a beautiful woman who (because I know you want to know) is 1/4 non-Japanese.

In his talk he made a couple of interesting points:

1. We can still tell the difference between the human and robot copy because of the small, unconscious movements humans make. They did an experiment where they revealed the robot from behind a curtain for around 3 seconds. If the robot sat perfectly still, pretty much everyone rated it as "robot". However, if they made the robot move her eyes to the side, much like an embarrassed woman getting stared at would, the majority rated "human".

2. When you're controlling the robot remotely ("telepresence", they call it), you really feel like you embody the robot. They tried putting a female operator behind the controls of Hiroshi Ishiguro's robot. She could see through his camera, similar to playing a first-person shooter. Then (the real) Dr. Ishiguro put his arm around his robot-clone. The operator squirmed and objected, as if he were really putting his arm around her!

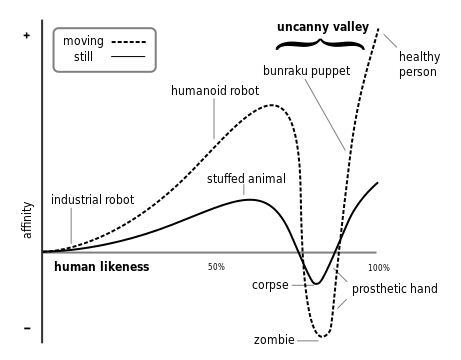

3. His first few robots sit squarely at the bottom of the uncanny valley.

He was very honest and open about it. Actually Dr. Ishiguro was way cooler than the YouTube videos make him out to be :P

4. Read his book. *ahem* Haha, what a joker. But if you can't read Japanese, he recommends a movie called Surrogates (he has a cameo in it, but that's not why!). Indeed, I watched the movie and it truly paints a picture of the near future. What happens if humans get a chance to spend their days in a surrogate robot body? Can you imagine someone saying "Wow, you really look like your surrogate!" -- not the other way around. What if your main identity was not YOU? And how about surrogate "hacking": "I know this robot body looks Japanese, but it's really me in here, me, Angelica!"

And now for some perspective...

Robot telepresence is already being explored by -- of course -- the military, who use remote-controlled robots to disable landmines. On the civilian front, Willow Garage has a robo-telepresence project for the workplace, and an MIT lab has a small, crab-like telepresence robot that allows you to make gestures with its claws. At the Japan National Institute of Advanced Industrial Science and Technology, HRP-4C both looks human (or like a famous virtual pop star with green hair), and can WALK. It's not quite at the telepresence stage yet though, as far as I know.

But I'd say there is still a big hurdle to wanting to "embody" one of these.

One big problem is navigating the thing. Who wants to use a joystick to move around? How much can you really see around you, even with multiple cameras? Maybe we could use an automatic buffer system so that the robot always stays 50 cm from the wall, but it seems more like attacking the symptom with a blunt hammer.
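To make the buffer idea concrete, here's a minimal sketch of what it could look like, not something any of the robots above actually run. I'm assuming the robot has a single forward-facing range sensor and that the operator sends a forward speed; the function name `buffered_velocity` and the `slow_zone` parameter are made up for illustration.

```python
def buffered_velocity(commanded, distance_to_wall, buffer=0.5, slow_zone=0.5):
    """Limit the operator's forward speed so the robot stops `buffer` metres from a wall.

    commanded        -- forward speed requested by the operator (m/s, negative = reverse)
    distance_to_wall -- range reading straight ahead (m); assumed sensor, hypothetical
    buffer           -- minimum distance to keep from the wall (m), 50 cm as in the post
    slow_zone        -- distance over which the robot ramps down before the buffer (m)
    """
    if commanded <= 0:
        # Backing away from the wall is always allowed.
        return commanded
    margin = distance_to_wall - buffer
    if margin <= 0:
        # Already at (or inside) the buffer: refuse to move any closer.
        return 0.0
    # Ramp linearly from full speed down to zero as the margin shrinks.
    scale = min(1.0, margin / slow_zone)
    return commanded * scale


if __name__ == "__main__":
    for d in (2.0, 0.75, 0.5):
        print(d, buffered_velocity(1.0, d))
```

It works, but it also shows the "blunt hammer" problem: the robot simply overrides the operator near walls, which does nothing to help them actually see or sense where they are.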

On the other hand, natural movements of the head and mouth can already be controlled using a simple video camera and image processing, as described in my video on the Kokoro Android from last year:

Or you could go directly to brain-machine interfaces:

Interface, interface, interface. In our lab we'll be starting to address some of these issues. It's kind of scary to think about where your research could lead in the future, though... when I got into robotics, I thought the idea of Artificial Intelligence was so far-fetched that I would never see it in my lifetime.

Now I see that it's not AI that'll be the next big thing in robotics. It's real people, real intelligence. Same implications?

3 comments:

So...are you building the Borg or Skynet?

Skynet.

Cool stuff! Sounds like a great opportunity to hear him talk.
