I wrote some reports on the various summer schools I attended last month in Europe. I might as well share them with the world, so I'll be uploading them as time allows :) Enjoy!

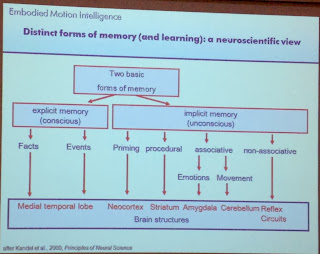

Biology-inspired embodied learning

On the first day of the summer school, we learned about walking hexapods, such as the one above, created at CITEC in Bielefeld.

Florentin Wörgötter explained how they replicate Central Pattern Generators (CPGs), the neural circuits that produce rhythmic movement in humans and animals. For example, even when a cat's legs are disconnected from the brain, the CPGs in its spinal cord still move the legs in a walking motion.
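To make the CPG idea concrete, here is a minimal Python sketch. It is not the CITEC controller. It assumes the common textbook model of a CPG as a set of coupled phase oscillators, one per leg. The leg numbering, the coupling strength `k` and the target phase offsets are illustrative choices. The coupling alone pulls the six legs into a tripod gait, with three legs in phase and the other three half a cycle behind, and no input from a "brain" is needed.

```python
import math
import random

# Hypothetical leg numbering: 0-2 = left front/middle/hind, 3-5 = right front/middle/hind.
# Tripod gait: legs {0, 2, 4} move together, in anti-phase with legs {1, 3, 5}.
TRIPOD = [0, 1, 0, 1, 0, 1]


def desired_offset(i, j):
    """Target phase difference theta_j - theta_i: 0 within a tripod, pi across tripods."""
    return 0.0 if TRIPOD[i] == TRIPOD[j] else math.pi


def simulate_cpg(steps=5000, dt=0.01, omega=2 * math.pi, k=1.0, seed=0):
    """Integrate six coupled phase oscillators (one per leg) with simple Euler steps."""
    rng = random.Random(seed)
    theta = [rng.uniform(0.0, 2 * math.pi) for _ in range(6)]  # random initial phases
    for _ in range(steps):
        # Each oscillator runs at omega and is nudged towards its target offset to every other leg.
        dtheta = [omega + k * sum(math.sin(theta[j] - theta[i] - desired_offset(i, j))
                                  for j in range(6) if j != i)
                  for i in range(6)]
        theta = [t + dt * d for t, d in zip(theta, dtheta)]
    return theta


def phase_diff(a, b):
    """Phase difference b - a wrapped to [0, 2*pi)."""
    return (b - a) % (2 * math.pi)


theta = simulate_cpg()
# A leg's joint command could then be, e.g., amplitude * sin(theta[leg]).
print([round(phase_diff(theta[0], th), 2) for th in theta])
```

After the transient, the printed offsets should sit close to 0 (or 2π) for legs in the same tripod and close to π for the others, whatever the random starting phases.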

To escape holes, they use a chaotic rather than a periodic leg-movement pattern. They also discussed how insects walk and suggested that, even without cognition, limbs can inform each other, giving a kind of local intelligence. For example, a leg can tell that it is carrying load both from (a) its own sensor registering a lot of force and (b) the adjacent legs' sensors registering less force.

He also presented Kandel's Principles of Neural Science, which they draw on to model a 3-5 second working memory.

We did a hands-on tutorial on memory and learned about retrieval-induced forgetting: actively remembering something can cause related material to be forgotten. Episodic memories are processed by the hippocampus.

We performed an EEG experiment in which I wore a 32-sensor cap and looked at a series of pictures. I was asked to remember a picture if it was followed by one symbol and to forget it if it was followed by another. By averaging the EEG signals over many trials, we could cancel out the random noise and find the event-related potentials (ERPs) that show where the brain is recalling memory: explicit remembering showed up over the parietal cortex (P3).
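A small simulation shows why averaging works. This sketch is purely illustrative. The ERP shape, noise level and trial counts are made up and are not our recorded data. The ERP is time-locked to the stimulus, while the noise is independent from trial to trial. Averaging therefore keeps the ERP and shrinks the noise roughly as `noise_sd / sqrt(n)`.

```python
import math
import random


def erp_template(n_samples=100, peak=60, width=8.0, amplitude=5.0):
    """Hypothetical ERP: a single positive bump time-locked to the stimulus."""
    return [amplitude * math.exp(-((t - peak) ** 2) / (2 * width ** 2)) for t in range(n_samples)]


def simulate_trials(n_trials, noise_sd=20.0, seed=1):
    """Each trial = the same ERP plus independent noise that is much larger than the ERP."""
    rng = random.Random(seed)
    template = erp_template()
    return [[s + rng.gauss(0.0, noise_sd) for s in template] for _ in range(n_trials)]


def average_trials(trials):
    """Average sample by sample across trials."""
    return [sum(samples) / len(trials) for samples in zip(*trials)]


def rms_error(estimate, truth):
    """Root-mean-square difference between the averaged signal and the true ERP."""
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth))


truth = erp_template()
for n in (1, 25, 400):
    # The residual noise should shrink roughly like noise_sd / sqrt(n): about 20, 4, 1.
    print(n, round(rms_error(average_trials(simulate_trials(n)), truth), 2))
```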

To fill the time between trials and to make sure I was not rehearsing the pictures, the experimenter "tricked" me with a speed test: finding a pattern of lines as fast as possible.

Speech acquisition

Larissa Samuelson talked about her child psychology experiments and proposed that we ground words in space. Kids seemed to learn new names for objects (e.g. "momi" for binoculars) by associating labels with objects in space, not in time. Other associative cues, like color, were not as important as location. Indeed, people use spatial locations as an index to recall facts: on a test, for example, we may remember where a fact was in the book even though we can't recall the fact itself.

Katharina J. Rohlfing gave an overview of what can enhance memories:

- Sleep - perhaps information is downscaled during sleep, or re-activated while sleeping

- Retrieval - actively retrieving the memory strengthens the connection

- Familiar context - a familiar context can lighten the cognitive load when encountering something new to learn

In a tutorial by Roman Klinger, we learned about probabilistic graphical models. As an exercise, we designed a graphical model for epilepsy, using Wikipedia to look up causes and effects. We grouped related causes into a single variable and ordered the variables from cause to effect. We then used the software SamIam to build the model and its probability tables. We also learned how a Markov chain can model transition probabilities, e.g. to generate novel music after learning from many examples.
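A first-order Markov chain for music can be sketched in a few lines. It counts which note follows which in the example melodies, normalises the counts into transition probabilities, and then samples new notes from them. The toy melodies and function names below are hypothetical and do not come from the tutorial.

```python
import random
from collections import defaultdict


def train_markov_chain(melodies):
    """Count note-to-note transitions, then normalise each row into probabilities."""
    counts = defaultdict(lambda: defaultdict(int))
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            counts[current][following] += 1
    return {
        note: {nxt: c / sum(followers.values()) for nxt, c in followers.items()}
        for note, followers in counts.items()
    }


def generate(chain, start, length, seed=None):
    """Sample a new melody by repeatedly drawing the next note from the transition table."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length and melody[-1] in chain:
        notes, probs = zip(*chain[melody[-1]].items())
        melody.append(rng.choices(notes, weights=probs)[0])
    return melody


examples = [["C", "D", "E", "C"], ["C", "E", "G", "E", "C"], ["E", "D", "C", "D", "E"]]
chain = train_markov_chain(examples)
print(chain["C"])  # after C: D with probability 2/3, E with probability 1/3
print(generate(chain, "C", 10, seed=42))
```

Each generated note depends only on the previous one. This is why a first-order chain produces locally plausible but globally aimless melodies. Higher-order chains condition on several previous notes.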

Markov Random Fields (MRFs) are like the directed graphical models, but with undirected edges. They describe the probabilities of joint value settings of the variables, like the binary (0/1) tables we worked with earlier. The instructor showed how Markov Random Fields can be used for sentiment analysis, where each word is a feature. Want to learn about probabilistic graphical models? The full slides are here.
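The idea can be illustrated with a hypothetical three-variable binary MRF. This is not the tutorial's sentiment model. Each undirected edge carries a table of scores (potentials) over 0/1 settings. The joint probability is the product of those scores divided by a normalising constant Z. Brute-force enumeration like this only works for tiny models, which is why approximate inference methods are needed in practice.

```python
from itertools import product

# Hypothetical binary MRF over three variables connected as an undirected chain A - B - C.
# Each edge has a potential table over joint (0/1, 0/1) settings: higher = more compatible.
# Unlike conditional probability tables, potentials need not sum to 1.
VARIABLES = ["A", "B", "C"]
POTENTIALS = {
    ("A", "B"): {(0, 0): 3.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 3.0},
    ("B", "C"): {(0, 0): 2.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 2.0},
}


def unnormalised(setting):
    """Product of edge potentials for one joint setting (a dict variable -> 0/1)."""
    score = 1.0
    for (u, v), table in POTENTIALS.items():
        score *= table[(setting[u], setting[v])]
    return score


def joint_distribution():
    """Enumerate all 2^n settings and divide by the partition function Z."""
    settings = [dict(zip(VARIABLES, values)) for values in product((0, 1), repeat=len(VARIABLES))]
    z = sum(unnormalised(s) for s in settings)
    return {tuple(s[v] for v in VARIABLES): unnormalised(s) / z for s in settings}


dist = joint_distribution()
# A and C have no direct edge, yet they are correlated through B.
print(round(sum(p for (a, b, c), p in dist.items() if a == c), 4))  # 0.5833
```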

Knowledge-based systems

Michael Beetz of the University of Bremen showed the state of the art in pancake-flipping robots. His goal is to engineer useful knowledge-based systems that draw on knowledge available on the internet. For example, his robot looked up recipes on wikiHow and understood the semantic meaning of instructions (e.g., that "flip the pancake over" means using the spatula). He also showed a video from Stanford of a robot cleaning a room: arranging cushions, placing magazines on the table, and putting blocks away.

It was very impressive, until he told us it was remote-controlled by a human! This shows that we cannot blame hardware for the lack of progress; we are limited by software.

In the Probabilistic Graphical Models tutorial, we continued with conditional random fields (CRFs) and linear-chain CRFs (a special case of CRFs, though the two terms are often used interchangeably in papers on text). We learned how to model problems using undirected graphs with output nodes, and how learning works by estimating the lambda (λ) weights of the feature functions from data.
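Here is a minimal Python sketch of a linear-chain CRF for a made-up name-tagging task. It shows concretely what the λ parameters do. The labels, feature functions and weights are all hypothetical; in practice the λ values are learned from data. The sketch scores a label sequence as a weighted sum of features and normalises it with the forward algorithm. It then decodes the most likely labels with the Viterbi algorithm, which is the same recursion with max in place of sum.

```python
import math
from itertools import product

LABELS = ["O", "NAME"]  # hypothetical tag set: O = other word, NAME = part of a person's name


# Hypothetical binary feature functions f_k(prev_label, label, words, t).
def f_capitalised_name(prev, cur, words, t):
    return 1.0 if words[t][0].isupper() and cur == "NAME" else 0.0


def f_name_follows_name(prev, cur, words, t):
    return 1.0 if prev == "NAME" and cur == "NAME" else 0.0


def f_lowercase_other(prev, cur, words, t):
    return 1.0 if words[t].islower() and cur == "O" else 0.0


FEATURES = [f_capitalised_name, f_name_follows_name, f_lowercase_other]
LAMBDAS = [2.0, 0.5, 1.5]  # made-up weights; training would estimate these from data


def local_score(prev, cur, words, t):
    """Weighted sum of the features that fire at position t."""
    return sum(lam * f(prev, cur, words, t) for lam, f in zip(LAMBDAS, FEATURES))


def sequence_score(labels, words):
    """Unnormalised log-score of a whole label sequence (prev is None at t = 0)."""
    return sum(local_score(labels[t - 1] if t > 0 else None, labels[t], words, t)
               for t in range(len(words)))


def log_partition(words):
    """Forward algorithm: log Z(x), summing over all label sequences in O(T * |labels|^2)."""
    alpha = {y: local_score(None, y, words, 0) for y in LABELS}
    for t in range(1, len(words)):
        alpha = {y: math.log(sum(math.exp(alpha[p] + local_score(p, y, words, t)) for p in LABELS))
                 for y in LABELS}
    return math.log(sum(math.exp(a) for a in alpha.values()))


def probability(labels, words):
    """P(labels | words) = exp(score) / Z(words)."""
    return math.exp(sequence_score(labels, words) - log_partition(words))


def viterbi(words):
    """Most likely label sequence: the forward recursion with max instead of sum."""
    best = {y: (local_score(None, y, words, 0), [y]) for y in LABELS}
    for t in range(1, len(words)):
        best = {y: max(((score + local_score(p, y, words, t), path + [y])
                        for p, (score, path) in best.items()), key=lambda item: item[0])
                for y in LABELS}
    return max(best.values(), key=lambda item: item[0])[1]


words = ["Ada", "Lovelace", "wrote", "notes"]
# Sanity check: the probabilities of all |labels|^T label sequences sum to 1.
total = sum(probability(list(ys), words) for ys in product(LABELS, repeat=len(words)))
print(round(total, 6), viterbi(words))  # prints: 1.0 ['NAME', 'NAME', 'O', 'O']
```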

The advantage of CRFs is that they can model how context, including non-adjacent parts of the input, influences the output. For example, in an image of a lizard, we can increase the probability that a pixel is part of the lizard if an adjacent pixel is known to be lizard. In a linear chain, the Viterbi algorithm finds the most likely label sequence. For inference, we can use either Gibbs sampling ("sampling through the network": repeatedly resampling each variable from its probability table given the current, fixed values of the others) or belief propagation.
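The lizard example can be sketched as Gibbs sampling on a small binary grid MRF. Everything here is an illustrative assumption. `OBSERVED` is a hypothetical 7x7 per-pixel detector output, `a` is a weight for agreeing with that detector, and `b` is a weight for agreeing with each of the four neighbouring pixels. Each step resamples one pixel given the current labels of its neighbours. Averaging the samples after a burn-in period then estimates the probability that each pixel is lizard.

```python
import math
import random

# Hypothetical per-pixel detector output (1 = "looks like lizard") on a 7x7 image.
# The detector missed the centre pixel of the lizard.
OBSERVED = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 0, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]


def gibbs_segmentation(observed, a=2.0, b=1.0, sweeps=2000, burn_in=200, seed=0):
    """Estimate P(pixel is lizard) for every pixel by Gibbs sampling a binary grid MRF.

    a rewards agreeing with the detector; b rewards agreeing with each of the 4 neighbours.
    """
    rng = random.Random(seed)
    rows, cols = len(observed), len(observed[0])
    labels = [row[:] for row in observed]  # start from the detector output
    counts = [[0] * cols for _ in range(rows)]
    for sweep in range(sweeps):
        for r in range(rows):
            for c in range(cols):
                neighbours = [labels[rr][cc]
                              for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                              if 0 <= rr < rows and 0 <= cc < cols]
                # Score each value given the current (held fixed) labels of the neighbours.
                score = {v: a * (v == observed[r][c]) + b * sum(n == v for n in neighbours)
                         for v in (0, 1)}
                p_lizard = 1.0 / (1.0 + math.exp(score[0] - score[1]))
                labels[r][c] = 1 if rng.random() < p_lizard else 0
                if sweep >= burn_in:
                    counts[r][c] += labels[r][c]
    kept = sweeps - burn_in
    return [[count / kept for count in row] for row in counts]


probs = gibbs_segmentation(OBSERVED)
independent = gibbs_segmentation(OBSERVED, b=0.0)  # ignore the neighbours entirely
print(round(probs[3][3], 2), round(independent[3][3], 2))
```

With `b = 0`, the neighbours are ignored and the centre pixel that the detector missed stays unlikely to be lizard. With the neighbour term, its lizard neighbours pull its probability well above one half.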

Lifelong, continuous learning

Pierre-Yves Oudeyer is from the INRIA Flowers team (FLOW = the state of being engrossed in an activity at just the right level of challenge, ERS = Epigenetic Robotics and Systems). He spoke about developmental mechanisms for autonomous lifelong learning in humans and robots, e.g. the famous Talking Heads experiment led by Luc Steels. In this experiment, pairs of robots, each consisting of a mounted camera, played language games. For example, one robot would say "wabadee" while looking at a wall of coloured objects, and the other robot would try to guess the referent. Through such games, the robots developed a shared language.
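The Talking Heads games were richer than this, since the robots also had to build perceptual categories. The core dynamic can still be sketched with a simplified "naming game" in the spirit of Luc Steels' work. In this hypothetical Python version, agents invent random words for a single object. After each successful game, both agents drop their competing words, and eventually the whole population agrees on one word.

```python
import random


def naming_game(n_agents=10, max_rounds=20000, seed=0):
    """Minimal naming game for a single object.

    Returns (number of games until all agents share exactly one word, that word),
    or (None, None) if no consensus is reached within max_rounds.
    """
    rng = random.Random(seed)
    vocab = [set() for _ in range(n_agents)]  # each agent's candidate words for the object
    invented = 0
    for round_no in range(1, max_rounds + 1):
        speaker, hearer = rng.sample(range(n_agents), 2)
        if not vocab[speaker]:
            vocab[speaker].add(f"word{invented}")  # invent a new word, like "wabadee"
            invented += 1
        word = rng.choice(sorted(vocab[speaker]))
        if word in vocab[hearer]:
            vocab[speaker] = {word}  # success: both agents drop competing words
            vocab[hearer] = {word}
        else:
            vocab[hearer].add(word)  # failure: the hearer adopts the speaker's word
        if all(len(v) == 1 and v == vocab[0] for v in vocab):
            return round_no, next(iter(vocab[0]))
    return None, None


rounds, word = naming_game()
print(f"consensus on {word!r} after {rounds} games")
```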

Developmental robotics is related to linguistics, developmental neuroscience, and developmental psychology. Autonomous lifelong learning is important because we would like robots to adapt to users' needs and intentions. It takes time, and the robot needs to find its own data. This differs from typical machine learning, which is fast and relies on data supplied by humans.

He also showed off the new Poppy humanoid robot, whose parts can be 3D-printed and which takes only 2 days to assemble. It has a passive walking mechanism and costs 6,000-7,000 euros.

Pierre-Yves says that there are basic forms of motivation, such as food and water, maintenance of physical integrity, and social bonding. Babies may be most interested in learning things of just the right difficulty, so the trick is to look at the derivative of the error rather than the error itself. In other words, babies explore the activities where learning happens (i.e., where error is reduced) fastest!
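The "derivative of the error" idea can be sketched as an agent that always picks the activity currently showing the largest learning progress, that is, the biggest drop in recent error. This is a simplified illustration, not Oudeyer's actual algorithm. The three activities, the window size and the epsilon-greedy choice are all assumptions. Activities that are too easy or impossible show no progress, so the agent spends most of its time on the learnable one.

```python
import random


def make_activity(start_error, improvement):
    """Hypothetical activity: each practice multiplies the error by (1 - improvement)."""
    state = {"error": start_error}

    def practice():
        state["error"] *= 1.0 - improvement
        return state["error"]

    return practice


def learning_progress(errors, window=10):
    """Drop in mean error from the previous window to the most recent one (error derivative)."""
    if len(errors) < 2 * window:
        return float("inf")  # not enough data yet: try every activity a few times first
    older = sum(errors[-2 * window:-window]) / window
    recent = sum(errors[-window:]) / window
    return older - recent


def explore(steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy choice of the activity with the highest learning progress."""
    rng = random.Random(seed)
    activities = {
        "too easy": make_activity(0.0, 0.0),     # error already zero: nothing left to learn
        "learnable": make_activity(1.0, 0.03),   # error shrinks steadily with practice
        "impossible": make_activity(1.0, 0.0),   # error stays high no matter what
    }
    history = {name: [] for name in activities}
    choices = []
    for _ in range(steps):
        if rng.random() < epsilon:
            name = rng.choice(list(activities))  # occasional random exploration
        else:
            name = max(activities, key=lambda n: learning_progress(history[n]))
        history[name].append(activities[name]())
        choices.append(name)
    return choices


choices = explore()
print({name: choices.count(name) for name in ("too easy", "learnable", "impossible")})
```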

He presented the idea of goal babbling: a baby should not waste time on impossible goals, such as trying to touch a wall 3 metres away. What is learned through goal babbling should also transfer from one space or environment to another. We should also take into account that our perceptual capabilities evolve too; for example, a baby's visual field is much narrower than an adult's.

To be continued...
